Abstract

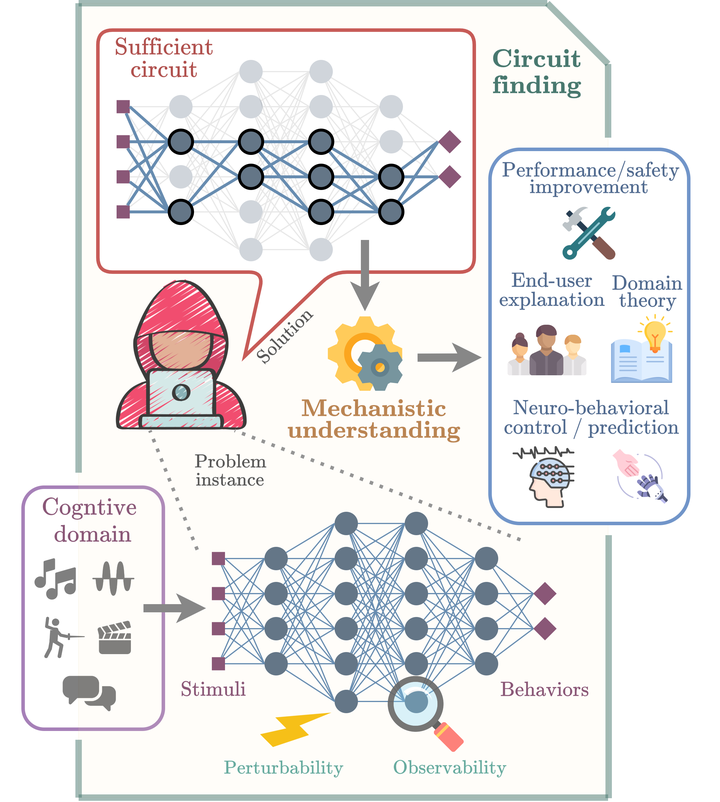

Emerging folklore in the cognitive sciences suggests that interpretability techniques to reverse-engineer artificial neural networks (ANNs) could speed up discovery and theory-building. For many researchers in psychology, linguistics, neuroscience, and artificial intelligence (AI), the full observability and perturbability of ANNs trained on complex tasks affords a shortcut to domain insights, cognitive theories, neurocognitive models, application improvement, and user safety. Folklore intuitions, however, are typically disconnected from other relevant knowledge. Here we examine these intuitions formally by drawing relevant connections to computational complexity theory. We model interpretability queries computationally and analyze their resource demands for biological/artificial high-level cognition. We prove mathematically that, contrary to folklore, basic circuit-finding queries in classic ANNs are already infeasibly demanding to answer even approximately. We discuss how interdisciplinary integration can mitigate this disconnect and situate the broader implications for the cognitive sciences, the philosophy of AI-fueled discovery, and AI ethics.